Sometimes I get to work on public issues and share reports. North Macedonia faces important challenges: it recently joined NATO and is seeking entry into the EU. It is a small country of 2+ million people, with closely divided political parties and a sizable Albanian minority. The citizenry wants to escape a system of organized corruption and develop its economy, but the two main parties offer different paths. And, of course, there is the pandemic. Elections scheduled for April were postponed and finally held in July, under COVID-19 health protection protocols. Here are the public March 2020 and June 2020 reports.

Second Survey of Civic Leadership Programs

We recently wrapped up our work on a second survey of senior executives of Civic Leadership Programs. Click here for the memo. With this survey, we have demonstrated that our methodology is sound. In the memo, you will find interesting insights on a variety of topics, including professional development, the use of endowments, and commonly held views on leadership programs.

Our next step is to conduct a pilot program in which civic leadership programs will collaborate with us on developing evidence-based measures of community impact and on better understanding the mechanisms for engaging alumni of their core programs.

Thanks again to the many executives who participated in the survey.

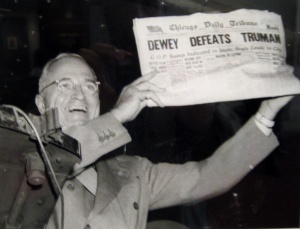

HOT OFF THE PRESS: British Inquiry into Inaccurate 2015 Election Polling

Most Americans are familiar with the photo of President Harry Truman, triumphant after the 1948 presidential election, holding up the Chicago Daily Tribune with the headline “Dewey Defeats Truman,” declaring that his opponent, New York Gov. Thomas Dewey, had won. In the early years of surveys, the pollsters and the newspaper screwed up.

Truman pulled in 303 electoral votes and 49.6% of votes cast, Dewey received 189 and 45.1%, and Strom Thurmond collected 39 and 2.4%. The pollsters used a poor sampling methodology, and the industry made adjustments going forward.

And, it happened again! In the May 7, 2015, British general election, late public polls showed the Conservative and Labour parties in a close race, at 34% each. Nope. The Conservatives collected 38% and Labour 31%.
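To put the size of that miss in numbers, one common yardstick is the error on the lead: the actual Conservative-minus-Labour gap minus the gap the final polls showed. This is offered as an illustration using the percentages above, not necessarily the exact metric the BPC inquiry used. Here $C$ and $L$ stand for the Conservative and Labour shares:

$$
\text{error on lead} = (C_{\text{result}} - L_{\text{result}}) - (C_{\text{poll}} - L_{\text{poll}}) = (38 - 31) - (34 - 34) = 7 \text{ points}
$$

In other words, polls showing a tie missed a seven-point Conservative win.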

So, what happened? The British Polling Council (BPC) and the Market Research Society just released their 115-page “Report of the Inquiry into the 2015 British general election opinion polls.” Click here for the report.

The BPC is an association of polling organizations that publish polls. Its self-described objective is to ensure standards of disclosure that give consumers of survey results that enter the public domain an adequate basis for judging the reliability and validity of the results.

So, before I jump into the findings, here is my perspective (things I have said for years) as to why this matters:

- The news media place far too much significance on the horserace early in the campaign season; it is a lazy way to get headlines. The result: Donald Trump touts horserace polls, and voters, who do not have time to delve into the details (isn’t that a role of news reporters?), follow the supposed front-runner.

Campaign professionals, including veteran news reporters and editors, know that in the early phases of a campaign, the guts of the polls are more important than the horserace. These details usually come from private polls that are not shared with the public. What is the voter sentiment? What are voters seeking in their next leader? These questions are supposed to identify trends such as who in the electorate is angry, how many of them there are, why they are angry and what will appeal to them. Without this deeper analysis, the Republican Party and the media were blindsided by the bluster of Donald Trump. The result is an American political system in upheaval, and hand-wringing among domestic voters and international observers.

- However, late in a close campaign, the horserace does matter. In the final weeks of an election, the campaigns should be well in tune with the electorate and, based on the horserace, know how to deploy their best messages and resources for Election Day. These final public polls, supposedly a product of our modern knowledge economy, are critical to providing stability during any change in national governance.

- But what if the horserace polls are wrong? That is a problem. If the polls do not match the results, it raises questions about the validity of the election results. There are known problems in polling, often related to the rising cost of reaching random samples of voters. Many voters live in cell-phone-only homes, and reaching them is expensive. People with landlines are also answering surveys at increasingly lower rates, which further raises the cost of conducting a voter survey. Are there other reasons, beyond cost, driving these problems? For additional context, there is also the challenge of tracking voters who decide late in an election (this can be done, but I have not seen public polling organizations do it; anyone want to hire me to do this?).

- So, this inquiry matters. Public horserace election polls provide stability to the electorate and to world economies. The reputation of polling has similar implications for the corporations and organizations that rely on surveys. As voters and users of survey research, we need confidence in our survey systems. This inquiry should provide important lessons about the source of the problem and, hopefully, guidance for resolving it.

The British Inquiry into Inaccurate 2015 Election Polling:

I am sure colleagues smarter than me will weigh in with more insights. But, here is my interpretation of the findings of the BPC:

Reliable public election polling is expensive. The pollsters in the UK took shortcuts that do not work if you are trying to accurately predict a national election. If you want to publish national election polls focused on the horserace, spend your budget on fewer but more accurate polls. The problems were avoidable.

If you are interested in the details, here are the major points I gleaned from the report:

- The BPC could not find a single problem other than the fact that the polls consistently had too many Labour voters and too few Conservatives. The inquiry even worked through a process of elimination, examining eight other possible sources of consistent error (e.g., intentional misreporting, major shifts among late-deciding voters, voters misleading pollsters in their answers, unexpected changes in voter turnout, inaccurate expected-voter models). None of them accounted for such a large and consistent disparity.

- THE ULTIMATE PROBLEM IS COST. The BPC criticizes both the online and the telephone surveys conducted before the UK election for cutting corners on cost in ways that changed the composition of survey respondents.

- ONLINE SAMPLES ARE POOR FOR ACCURATELY MEASURING GENERAL POPULATION ATTITUDES, TO THE POINT WHERE FIXING THE PROBLEM ADDS SO MUCH COST THEY MIGHT AS WELL CONDUCT A TELEPHONE SURVEY. Many of the polls examined by the BPC were conducted online with opt-in panels: people who agree to be included in online polling samples. This approach is significantly less expensive than a random digit dialing (RDD) telephone survey.

There have been internal arguments in the opinion research world for decades about whether opt-in panels can match the accuracy of RDD. From the mid-1960s to the mid-2000s, RDD was the gold standard for most closely achieving true random sampling of a first-world voter population (the new gold standard includes manually dialing cell phone numbers). Along with the randomness of being picked for a survey in RDD (as opposed to opting into a panel), the use of a flesh-and-blood interviewer is considered essential to minimizing the sampling and other errors inherent in a survey. A professional interviewer is trained to get people to respond who might initially decline to participate.

There is no magical statistical formula for bridging the differences in opinion between people who readily agree to take a poll and people who need some cajoling. Some firms conducting studies online have tried different statistical techniques to patch together a proxy for a random sample. Some claim their models are adequate, but, to me, the failure in the UK demonstrates otherwise. While these methods are decent for testing experimental hypotheses in surveys, I believe they are flawed when trying to give the general public a reliable estimate of election preferences.
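One widely used technique of this kind is post-stratification, or cell weighting: respondents in under-represented groups count for more, and those in over-represented groups count for less, until the sample matches known population shares. The sketch below is a minimal illustration, not any firm's actual model. It assumes a single weighting variable (age), a hypothetical 50/50 population split, and made-up answers. The names `cell_weights` and `weighted_share` are hypothetical helpers written for this example.

```python
# A minimal post-stratification (cell-weighting) sketch.
# The cells, population shares, and answers are hypothetical illustrations,
# not data from the BPC inquiry.
from collections import Counter


def cell_weights(respondents, population_shares):
    """Weight each cell so the weighted sample matches known population shares.

    respondents: list of (cell, answer) tuples
    population_shares: dict mapping cell -> population share (summing to 1)
    """
    n = len(respondents)
    counts = Counter(cell for cell, _ in respondents)
    weights = {}
    for cell, share in population_shares.items():
        if counts[cell] == 0:
            # An empty cell cannot be weighted up; no formula fills that gap.
            raise ValueError(f"no respondents in cell {cell!r}")
        weights[cell] = share / (counts[cell] / n)
    return weights


def weighted_share(respondents, weights, answer):
    """Share of respondents giving `answer`, after applying cell weights."""
    total = sum(weights[cell] for cell, _ in respondents)
    hits = sum(weights[cell] for cell, a in respondents if a == answer)
    return hits / total


# Hypothetical opt-in sample: younger people are over-represented (7 of 10)
# even though the population is assumed to be split 50/50 by age.
sample = (
    [("young", "Labour")] * 5 + [("young", "Conservative")] * 2
    + [("old", "Labour")] * 1 + [("old", "Conservative")] * 2
)
population = {"young": 0.5, "old": 0.5}

weights = cell_weights(sample, population)
raw_labour = sum(a == "Labour" for _, a in sample) / len(sample)  # 0.6
weighted_labour = weighted_share(sample, weights, "Labour")  # 11/21, about 0.524
print(f"unweighted Labour share: {raw_labour:.3f}")
print(f"weighted Labour share:   {weighted_labour:.3f}")
```

Weighting corrects the age mix, pulling the Labour share from 0.600 to about 0.524, but it silently assumes that the young people who opted in vote like the young people who did not. If the people who readily volunteer differ from those who need cajoling within the same cell, no choice of weights removes that bias, which is the gap described above.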

- TELEPHONE SAMPLES CAN ALSO HAVE PROBLEMS BUT ARE MORE EASILY FIXED. It is well documented that RDD telephone surveys of voters have become increasingly expensive because they get fewer responses from voters with landline telephones (people are less willing to respond, and they have more ways to spend their evenings than answering telephone calls) and because cell phone numbers have to be hand-dialed. The BPC report calls on future telephone surveys to extend the hours in which they call, make more callbacks, including calls to people who do not initially respond, increase the percentage of cell-phone-only users, and spend more days completing their surveys. These are all tried-and-true elements of a well-conducted survey, and it is a shame that pollsters sharing important civic information have cut costs to the point of damaging public trust.

Do we have the same problem in the United States in our election polls? The opinion research industry went through a similar self-examination after the 2008 Presidential election and reached similar conclusions. The good news is that US public polls are mainly conducted by telephone and have not shown a consistent error. The bad news for news outlets is that these polls are very expensive. As I have mentioned before, I hope this forces more reporters to look at polls more deeply than the horserace, so we better understand the good and the bad in the minds of voters.

I have not seen this addressed in the BPC report, but why were major British newspapers reporting on online polls — known to have flaws — for their national elections?

A few final notes: polling budgets for news organizations have likely been stretched thin by the long, drawn-out primaries in both parties, so we will see fewer public polls through the conventions, and possibly in the fall election season. As I have shared before, Gallup announced it had made a business decision to no longer conduct, at its own expense, public election polls focused on the horserace.

EXCLUSIVE: Release of the first-ever survey of Civic Leadership Programs (CLPs)

Here it is, the first-ever methodologically-rigorous, random sample survey of civic leadership programs. Click here for the research plan, the memorandum including overall results and the crosstabs.

Thank you to the 120 senior executives who participated in this survey. In appreciation, they received the results a few weeks ago.

My hope, for those of you trying to move your CLP forward, is that this survey demonstrates the utility of using surveys to guide organizations. While this survey covers only a sliver of the potential for CLPs, it reveals that opinion research (surveys) can help individual CLPs be more successful and drive more impact in their communities. The average CLP is more than 25 years old and has more than 750 alumni, or graduates of its core program. At this size, CLPs are too large not to actively pursue strategies to increase their impact in their communities.

Survey of Civic Leadership Programs: Alumni Activities in Leadership Organizations

In this short survey, we focus largely on alumni activities in CLPs and break them down into six categories:

- Management of Alumni Relations: Some broad organizational structure for engaging alumni;

- Role in CLP Management: Ways in which alumni may be involved in managing the CLPs;

- Staffing of Alumni Relations: Investment in staff devoted to alumni relations;

- Role in Fundraising and Outreach: Broad roles in which alumni are used for development and recruitment;

- Programming Offerings: General ways programs are designed for alumni; and,

- Communications: Ways in which the CLP communicates with alumni.

We also created an index to categorize the activities of CLPs as low-, medium- or high-performing, and related these categories to whether CLPs are set up to take on challenging community topics.

So, please review the materials and see how my research is planned, executed and analyzed. Enjoy!

Reading Between the Lines at The Times

To start off 2016, The New York Times snuck in something new: a general population survey conducted mostly online. Until then, the newspaper had conducted polls only with live interviewers calling landline and mobile telephone numbers.

John Broder, the paper’s editor of News Surveys, provided details about this change in a special Times Insider column. Methodology junkies will enjoy it. Click here to go to the article.

Was this a boring story for most readers? Probably.

But, here are some interesting things to consider:

Is this, in part, a trial-run by The NY Times to improve the reliability of the political horserace question, in time for the 2016 campaign? American readers, and the journalists who rely on them, are obsessed with the horserace – who is “winning” or “losing” and by how much? Donald Trump did not create this obsession, but in some fashion his candidacy reflects the American fascination with these questions.

Unfortunately, it has been well documented in recent years, at least by The New York Times’s own admission, that the reliability of general population and voter surveys has deteriorated, due to, among other things, the increase in cell-phone-only households. The resulting increase in the cost of conducting reliable polls has been staggering and has certainly taken a toll on newsroom budgets. Side note: would it also be fair to say that The New York Times just announced that its general public/voter polling is flawed?

We are lost in the political desert without reliable polls. News organizations are desperate to find a solution to return the credibility to these polls. They need to feed the public’s interest in the horserace. Likewise, journalists need the polls to help them write their political stories and analyses.

So, with this one poll and accompanying article, The New York Times might be preparing the general public for a new methodology for its political polling. I don’t think they can have it both ways for too long, with some polling that has an online component and some without. And, from my perspective, the sooner general public surveys with an online component become the gold standard, the sooner survey costs can come back to earth.

Of course, only The New York Times knows, from its own testing, whether this methodology is ready for its front pages. But why else would they publish the results and article?

If this methodology is sufficiently reliable, we will see the return of the horserace question across the front pages. Phew.

Will there be an impact for communication professionals? Will the standards for getting your polling published change? News editors across the country are asked daily to publish polls conducted by third parties. No matter how interesting the results, the first question is whether the survey was methodologically sound. Journalists, often weak in math and statistics, have been trained over the years to sniff out bad polling.

Will The New York Times create a new standard for polling, whether good or bad, and will news editors across the country follow their lead?

Perhaps John Broder’s article will have broader implications than being just a dry review of survey methodologies.

What a great way to close out the year, LRI!

Check out the end-of-year message from Leadership RI. Click here. Mike Ritz and his staff are always on the go. What are they saying to their target audience – alumni, program participants, and funders?

- We have priorities, and we are willing to try new things – and take on hard challenges – to make progress.

- We are objectively measuring our actions and results.

- We are engaging the general public on important issues. This is part of our responsibility as leaders.

- We have a collaborative culture.

- We value and appreciate our staff.

- There is an important role for our alumni.

- We can have fun, too!

I am confident the program will continue to raise its impact and influence in 2016.

This is an excellent time for sharing accomplishments and thanks, as we all end a year of work and service. To pull it all together, it is also time to prepare a communication for early January to share priorities and expectations for 2016. If you have an “end-of-year” message you would like me to privately review or share, feel free to email it to me. Happy end of year!

Poker with Nate Silver (Not): When to hold ‘em, and when to fold ‘em

Kudos to AAPOR (American Association for Public Opinion Research) for adding a new, fun twist to this year’s conference. They added a Texas Hold ‘Em charity event. Finally, some numbers-friendly competition with other pollsters, and only our dignity on the line.

Nate Silver, of FiveThirtyEight fame and a recipient of an AAPOR award, was kind enough to join us. Cool. As we entered the poker parlor, Nate and I were chatting, and I thought it would be a nice challenge to sit at his table, until Nate’s voice dropped to a whisper and he shared, “It has been a while (playing poker), but I did play professionally for a few years.”

So, I moved to another table. Yes, Kenny Rogers’ ditty was playing in my head.

In the end, I failed to make it out of the first round, and Nate made it to the final group of three. Our celebrity host, a World Series of Poker veteran, pulled out some impressive moves and won the event.

Nate Silver’s first influential work was in baseball statistics. He would probably enjoy playing in my fantasy baseball league, full of other professional political/sports-junkie quant types. Too bad there are no seats left at our table (phew).